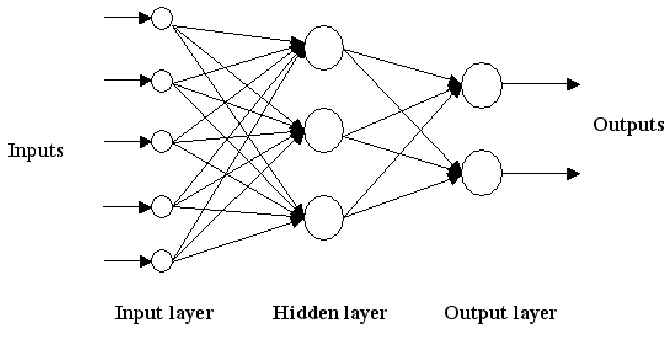

Neural networks, loosely inspired by the human brain’s interconnected neurons, are a class of machine learning models that recognize patterns, make predictions, and learn from data. These artificial neural networks (ANNs) consist of interconnected nodes, or artificial neurons, organized into layers: an input layer, one or more hidden layers, and an output layer.

Key Components:

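1. Neurons:

   - Input Neurons: Receive the raw input data, typically one neuron per input feature.

   - Hidden Neurons: Compute a weighted sum of their inputs and apply an activation function.

   - Output Neurons: Produce the final output, such as a class score or a predicted value.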

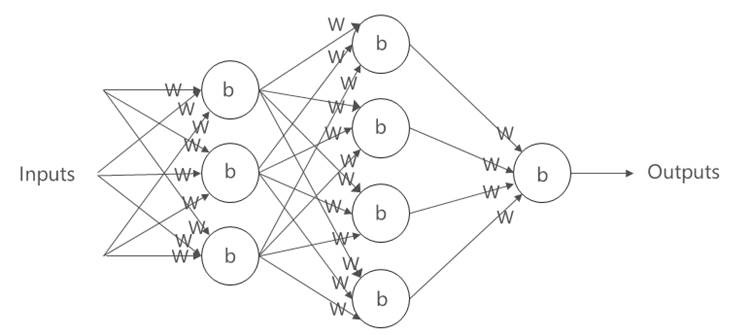

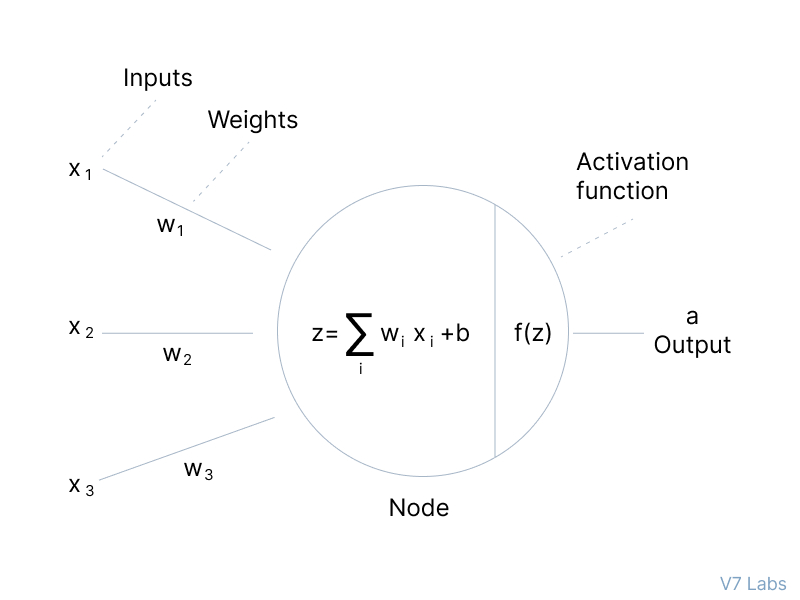

2. Weights:

   - Each connection between neurons has an associated weight, which is adjusted during training to strengthen or weaken the signal passed along that connection.

3. Activation Function:

   - Introduces non-linearity (for example sigmoid, tanh, or ReLU), allowing the model to learn patterns that a purely linear model cannot represent.

4. Layers:

   - Input Layer: Receives the initial data.

   - Hidden Layers: Transform the data between input and output. Deep networks have multiple hidden layers.

   - Output Layer: Produces the final output.
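The components above can be sketched in a few lines of plain Python. This is a minimal illustration rather than a production implementation: the function names (`neuron`, `layer`), the bias term, and all numeric values are assumptions chosen so the arithmetic is easy to follow by hand.

```python
import math

def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def relu(z):
    """Pass positive values through unchanged; clamp negatives to 0."""
    return max(0.0, z)

def neuron(inputs, weights, bias, activation):
    """Weighted sum of the inputs plus a bias, passed through an activation."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return activation(z)

def layer(inputs, weight_rows, biases, activation):
    """A layer is a list of neurons that all read the same inputs."""
    return [neuron(inputs, w, b, activation) for w, b in zip(weight_rows, biases)]

# Hypothetical weights and inputs, picked only for illustration.
x = [1.0, 2.0]                                                     # input layer
hidden = layer(x, [[0.5, 0.25], [-1.0, 0.25]], [0.0, 0.0], relu)   # hidden layer
output = layer(hidden, [[2.0, -1.0]], [-2.0], sigmoid)             # output layer

print(hidden)  # [1.0, 0.0]
print(output)  # [0.5]
```

The second hidden neuron receives a weighted sum of -0.5, which ReLU clamps to 0. Without that non-linear step, stacking layers would collapse into a single linear transformation.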

Working Mechanism:

- Forward Propagation:

  - Input data is fed into the input layer.

  - The signal passes through the hidden layers via weighted connections, with activation functions introducing non-linearity.

  - The output layer produces the final result.

- Loss Function:

  - Compares the model’s output to the actual target, quantifying the error as a single number.

- Backpropagation:

  - The error is propagated backward through the network, using the chain rule to compute how much each weight contributed to it (the gradient of the loss).

  - Weights are adjusted using optimization algorithms (e.g., gradient descent) to minimize the error.

- Training:

  - Repeats forward propagation, loss computation, and backpropagation over many iterations, adjusting the weights so that the network generalizes from the training data to unseen data.
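The four steps above can be sketched as one small training loop. The example below is an illustration under stated assumptions, not a reference implementation: it assumes a network with one hidden layer of three sigmoid neurons, a mean squared error loss, full-batch gradient descent, bias terms, and the XOR truth table as toy data. The names `forward`, `loss`, and `train` and all hyperparameters are hypothetical.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# Toy dataset (assumption): the XOR truth table.
DATA = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 0.0)]

def forward(p, x):
    """Forward propagation: input -> hidden layer -> single output neuron."""
    h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
         for row, b in zip(p["w_h"], p["b_h"])]
    y = sigmoid(sum(w * hi for w, hi in zip(p["w_o"], h)) + p["b_o"])
    return h, y

def loss(p):
    """Loss function: mean squared error between outputs and targets."""
    return sum((forward(p, x)[1] - t) ** 2 for x, t in DATA) / len(DATA)

def train(epochs=2000, lr=0.5, n_hidden=3, seed=0):
    rng = random.Random(seed)
    p = {
        "w_h": [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(n_hidden)],
        "b_h": [0.0] * n_hidden,
        "w_o": [rng.uniform(-1, 1) for _ in range(n_hidden)],
        "b_o": 0.0,
    }
    losses = [loss(p)]
    for _ in range(epochs):
        # Gradient accumulators, one per parameter.
        g_w_h = [[0.0, 0.0] for _ in range(n_hidden)]
        g_b_h = [0.0] * n_hidden
        g_w_o = [0.0] * n_hidden
        g_b_o = 0.0
        for x, t in DATA:
            h, y = forward(p, x)
            # Backpropagation: apply the chain rule from the loss backward.
            d_out = 2 * (y - t) * y * (1 - y) / len(DATA)
            g_b_o += d_out
            for j in range(n_hidden):
                d_hid = d_out * p["w_o"][j] * h[j] * (1 - h[j])
                g_w_o[j] += d_out * h[j]
                g_b_h[j] += d_hid
                for i in range(2):
                    g_w_h[j][i] += d_hid * x[i]
        # Gradient descent: step each parameter against its gradient.
        p["b_o"] -= lr * g_b_o
        for j in range(n_hidden):
            p["w_o"][j] -= lr * g_w_o[j]
            p["b_h"][j] -= lr * g_b_h[j]
            for i in range(2):
                p["w_h"][j][i] -= lr * g_w_h[j][i]
        losses.append(loss(p))
    return p, losses

params, losses = train()
print(f"loss before training: {losses[0]:.4f}, after: {losses[-1]:.4f}")
```

The loss should fall over the course of training. How close it gets to zero depends on the initial weights, the learning rate, and the number of epochs, so the sketch makes no claim about the final value.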

Types of Neural Networks:

- Feedforward Neural Networks (FNN):

  - Information flows in one direction, from input to output, with no cycles.

- Recurrent Neural Networks (RNN):

  - Neurons have recurrent connections that feed the hidden state from the previous time step back into the network, enabling it to retain information about previous inputs.

- Convolutional Neural Networks (CNN):

  - Specialized for processing grid-like data, such as images. They use convolutional layers to extract local features.

- Generative Adversarial Networks (GAN):

  - Consist of a generator and a discriminator, trained adversarially so that the generator learns to produce realistic data.

- Long Short-Term Memory Networks (LSTM):

  - A type of RNN that uses gated memory cells to retain information over longer sequences, making it suitable for sequence data.
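Two of the ideas in this list, recurrence and convolution, are easier to see in code. The sketch below is illustrative only: `rnn_forward` assumes a single scalar recurrent unit with a tanh activation, and `conv2d_valid` assumes stride 1 with no padding. Both function names and all values are hypothetical. As in most deep learning libraries, the kernel is applied without flipping.

```python
import math

def rnn_forward(sequence, w_in, w_rec, bias=0.0):
    """Scalar recurrent unit: h_t = tanh(w_in * x_t + w_rec * h_prev + bias)."""
    h = 0.0
    states = []
    for x in sequence:
        h = math.tanh(w_in * x + w_rec * h + bias)  # previous state feeds back in
        states.append(h)
    return states

def conv2d_valid(image, kernel):
    """Slide the kernel over the image (stride 1, no padding), summing products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]

# Recurrence: one non-zero input followed by zeros.
with_memory = rnn_forward([1.0, 0.0, 0.0], w_in=1.0, w_rec=0.5)
no_memory = rnn_forward([1.0, 0.0, 0.0], w_in=1.0, w_rec=0.0)
print(with_memory)  # later states stay non-zero: the first input is remembered
print(no_memory)    # later states are 0.0: nothing carries over between steps

# Convolution: a kernel that responds to a dark-to-bright vertical edge.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
kernel = [
    [-1, 1],
    [-1, 1],
]
edges = conv2d_valid(image, kernel)
print(edges)  # [[0, 2, 0], [0, 2, 0]]
```

Setting `w_rec` to 0 removes the feedback connection, so the unit behaves like a feedforward neuron applied independently at each step. The convolution output is non-zero only in the column where the edge sits, which is the sense in which a convolutional layer extracts local features.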

Applications:

Neural networks find application in various domains, including:

- Image and speech recognition

- Natural language processing

- Autonomous vehicles

- Medical diagnosis

- Financial forecasting

- Gaming and entertainment

Challenges and Future Directions:

- Interpretability:

  - Understanding the decision-making process of complex neural networks.

- Computational Resources:

  - Training deep networks requires significant computational power.

- Ethical Considerations:

  - Addressing biases and ensuring fairness in neural network outputs.

Neural networks continue to evolve, with ongoing research focusing on improving efficiency, interpretability, and adaptability to new challenges.