Introduction

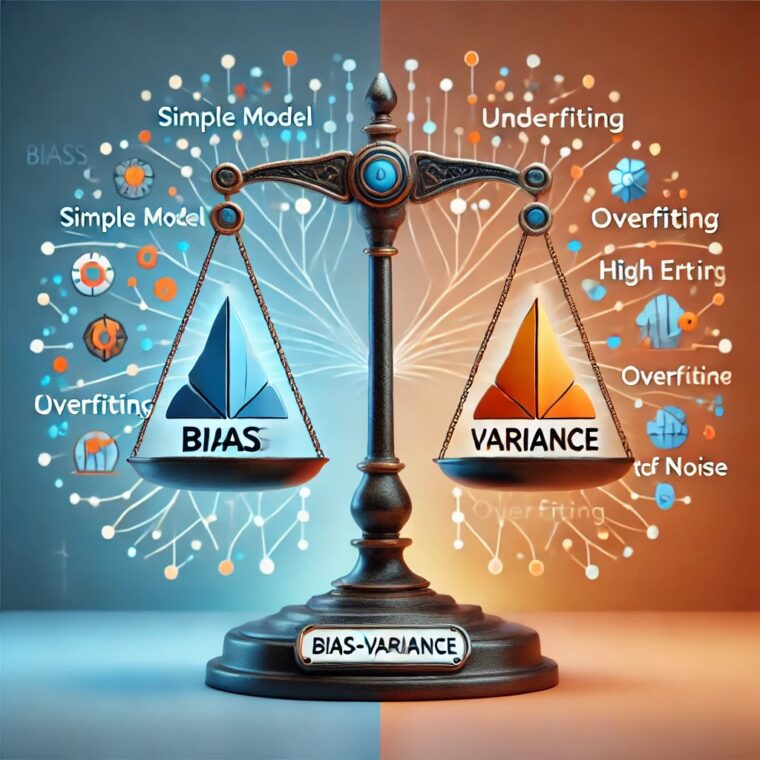

Bias and variance are two fundamental sources of error in machine learning models. Striking the right balance between them is crucial for building models that generalize well to unseen data. This article delves into the concepts of bias and variance, provides real-world examples, and offers a comparative analysis to better understand their impact.

What is Bias in Machine Learning?

Definition

Bias is the error introduced by approximating a real-world problem with an overly simple model. High-bias models make strong assumptions about the data, which leads to underfitting: the model fails to capture important patterns, no matter how much training data it sees.

Real-World Example of Bias

- Predicting House Prices: If a model predicts house prices based only on the number of bedrooms, ignoring factors like location, square footage, and amenities, it will have high bias. It oversimplifies the relationship and leads to poor predictions.

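- Facial Recognition Systems: A facial recognition model trained only on a limited demographic dataset may fail to recognize diverse faces. Strictly speaking, this is dataset (sampling) bias rather than the statistical bias of the bias-variance tradeoff, but the symptom is similar: systematic errors that further training on the same data will not fix.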

Effects of High Bias

- Poor performance on both training and test data.

- Model fails to learn important relationships.

- Predictions are overly generalized.

High bias: The model makes strong assumptions and is too simple.

Low bias: The model captures the complexities of the data well.
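To make underfitting concrete, here is a minimal sketch under stated assumptions: the data is synthetic and generated from y = x², and the helpers `fit_line` and `mse` are illustrative names written for this example, not part of any library. A straight line in x is too rigid for this data, so its error stays high on both the training and the test points, while the same least-squares fit on the feature x² removes that error.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = a + b * x (closed form)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    b = sxy / sxx
    a = mean_y - b * mean_x
    return a, b


def mse(preds, ys):
    """Mean squared error between predictions and targets."""
    return sum((p - y) ** 2 for p, y in zip(preds, ys)) / len(ys)


# Synthetic data (an assumption for illustration): y = x^2, no noise.
train_x = [-3 + 0.5 * i for i in range(13)]
test_x = [-2.75 + 0.5 * i for i in range(12)]
train_y = [x ** 2 for x in train_x]
test_y = [x ** 2 for x in test_x]

# High-bias model: a straight line in x cannot bend to follow the curve.
a, b = fit_line(train_x, train_y)
line_train_mse = mse([a + b * x for x in train_x], train_y)
line_test_mse = mse([a + b * x for x in test_x], test_y)

# Lower-bias model: the same fit, but on the feature z = x^2.
a2, b2 = fit_line([x ** 2 for x in train_x], train_y)
quad_train_mse = mse([a2 + b2 * x ** 2 for x in train_x], train_y)
quad_test_mse = mse([a2 + b2 * x ** 2 for x in test_x], test_y)

print(f"line:      train MSE={line_train_mse:.3f}, test MSE={line_test_mse:.3f}")
print(f"quadratic: train MSE={quad_train_mse:.3f}, test MSE={quad_test_mse:.3f}")
```

The tell-tale sign of high bias is that the line's error is large on the training data itself, not only on the test data.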

What is Variance in Machine Learning?

Definition

Variance is the error introduced when a model is too sensitive to small fluctuations in the training data. High-variance models tend to overfit, capturing noise rather than general patterns, so their predictions can change substantially when the training set changes.

Real-World Example of Variance

- Stock Market Predictions: If a model tries to predict stock prices using every minor fluctuation in historical data, it may overfit to short-term noise and fail to predict future trends.

- Medical Diagnosis: A machine learning model trained on a limited dataset may memorize specific cases but fail to diagnose new cases correctly.

Effects of High Variance

- Excellent performance on training data but poor performance on test data.

- Model memorizes data instead of learning general patterns.

- Fails to generalize well to unseen data.

High variance: The model memorizes the training data instead of learning general patterns.

Low variance: The model generalizes well to new data.
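Memorization can be shown with a small sketch. The assumptions here are mine: the data is synthetic (noisy samples of sin(x)), and the model is a hand-written k-nearest-neighbours regressor, with `knn_predict`, `mse`, and `sample` as illustrative helper names. With k = 1 the model simply returns the stored target of the closest training point, so it reproduces the training set perfectly but carries the noise over to new inputs.

```python
import math
import random


def knn_predict(train, x, k):
    """Average the targets of the k training points closest to x."""
    nearest = sorted(train, key=lambda point: abs(point[0] - x))[:k]
    return sum(y for _, y in nearest) / k


def mse(train, data, k):
    """Mean squared error of a k-NN model fitted on `train`, scored on `data`."""
    return sum((knn_predict(train, x, k) - y) ** 2 for x, y in data) / len(data)


def sample(rng, n):
    """Draw n noisy observations of sin(x) on [0, 2*pi] (synthetic data)."""
    points = []
    for _ in range(n):
        x = rng.uniform(0.0, 2.0 * math.pi)
        points.append((x, math.sin(x) + rng.gauss(0.0, 0.5)))
    return points


rng = random.Random(0)
train = sample(rng, 40)
test = sample(rng, 40)

# k=1 memorizes the training set; a larger k averages some of the noise away.
for k in (1, 9):
    train_err = mse(train, train, k)
    test_err = mse(train, test, k)
    print(f"k={k}: train MSE={train_err:.3f}, test MSE={test_err:.3f}")
```

For k = 1 the training error is exactly zero because every training point is its own nearest neighbour, while the test error is not. That gap between training and test error is the usual symptom of high variance.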

Bias-Variance Tradeoff: Finding the Right Balance

The ultimate goal of machine learning is to find a model that neither underfits nor overfits but generalizes well to new data. This requires balancing bias and variance.
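For squared-error loss, this balance can be written down explicitly. The notation below is assumed for illustration and does not appear elsewhere in this article: the data follows y = f(x) + ε with noise of mean zero and variance σ², the fitted model is written as f̂, and the expectation is taken over random training sets and noise.

$$
\mathbb{E}\big[(y - \hat{f}(x))^2\big]
= \underbrace{\big(\mathbb{E}[\hat{f}(x)] - f(x)\big)^2}_{\text{bias}^2}
+ \underbrace{\mathbb{E}\big[(\hat{f}(x) - \mathbb{E}[\hat{f}(x)])^2\big]}_{\text{variance}}
+ \underbrace{\sigma^2}_{\text{irreducible noise}}
$$

The first term measures how far the average prediction is from the truth, the second how much predictions scatter from one training set to the next, and the third is noise that no model can remove.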

Bias-Variance Tradeoff

A good ML model balances bias and variance:

| Bias | Variance | Model Behavior |
|---|---|---|
| High | Low | Underfitting |
| Low | High | Overfitting |
| Moderate | Moderate | Best Generalization |

🔹 Solution (when variance dominates):

- Use simpler models (like linear regression) to reduce variance.

- Use regularization (like L1/L2 penalties) to control complexity.

- Collect more data to reduce overfitting.

Striking this balance helps the model perform well on both training and unseen data.
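The first two rows of the table above can be checked empirically with a small simulation. This is only a sketch under my own assumptions: the true function is sin(x), the noise level is 0.5, and the two models (a global mean and a 1-nearest-neighbour lookup) are stand-ins for "too rigid" and "too flexible". Each model is refitted on many freshly drawn training sets, and the squared bias and variance of its prediction at one fixed point `x0` are estimated from the resulting predictions.

```python
import math
import random
import statistics


def true_f(x):
    """Ground-truth function (assumed for this simulation)."""
    return math.sin(x)


def sample(rng, n, noise=0.5):
    """Draw n noisy observations of true_f on [0, 2*pi]."""
    points = []
    for _ in range(n):
        x = rng.uniform(0.0, 2.0 * math.pi)
        points.append((x, true_f(x) + rng.gauss(0.0, noise)))
    return points


def predict_mean(train, x):
    """Rigid model: ignores x and returns the average training target."""
    return sum(y for _, y in train) / len(train)


def predict_1nn(train, x):
    """Flexible model: returns the target of the single closest training point."""
    return min(train, key=lambda point: abs(point[0] - x))[1]


def bias_sq_and_variance(model, x0, rng, n_sets=500, n_points=30):
    """Estimate squared bias and variance of model(x0) over resampled training sets."""
    preds = [model(sample(rng, n_points), x0) for _ in range(n_sets)]
    bias_sq = (statistics.mean(preds) - true_f(x0)) ** 2
    variance = statistics.pvariance(preds)
    return bias_sq, variance


rng = random.Random(42)
x0 = math.pi / 2  # true_f(x0) = 1, far from the overall average of the targets

results = {}
for name, model in (("global mean", predict_mean), ("1-NN", predict_1nn)):
    results[name] = bias_sq_and_variance(model, x0, rng)
    print(f"{name:12s} bias^2={results[name][0]:.3f} variance={results[name][1]:.3f}")
```

Under these assumptions the global mean should show the larger squared bias and the smaller variance, and the 1-NN model the reverse, which matches the "High / Low" and "Low / High" rows.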

Comparative Analysis of Bias and Variance

| Feature | High Bias | High Variance |
|---|---|---|
| Model Complexity | Low (simple model) | High (complex model) |
| Underfitting/Overfitting | Underfitting | Overfitting |
| Training Error | High | Low |
| Test Error | High | High |
| Example | Linear regression with few features | Deep neural network trained on limited data |

Strategies to Reduce Bias and Variance

Reducing Bias (Handling Underfitting)

- Use a More Complex Model: Switch from a simple linear regression to polynomial regression.

- Add More Features: Incorporate additional relevant variables into the model.

- Reduce Regularization: Reduce penalties (like L1/L2 regularization) to allow more flexibility in learning.
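The effect of relaxing regularization can be seen in a one-feature ridge regression, sketched here under assumptions of my own: a no-intercept model y = w·x, a tiny hand-made dataset, and an arbitrary list of penalty strengths. The closed-form ridge weight is w = Σxy / (Σx² + λ), so a larger λ pulls w toward zero and away from the least-squares fit.

```python
def ridge_weight(xs, ys, lam):
    """Closed-form ridge solution for the no-intercept model y = w * x."""
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + lam)


def train_mse(xs, ys, w):
    """Mean squared error of y = w * x on the training data."""
    return sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


# Illustrative data, roughly y = 2x (values chosen for this example only).
xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [2.1, 3.9, 6.2, 7.8, 10.1]

lambdas = [100.0, 10.0, 1.0, 0.0]  # from heavy regularization down to none
errors = []
for lam in lambdas:
    w = ridge_weight(xs, ys, lam)
    errors.append(train_mse(xs, ys, w))
    print(f"lambda={lam:6.1f}  w={w:.3f}  train MSE={errors[-1]:.4f}")
```

As λ shrinks, the weight moves toward the unregularized solution and the training error falls. The flip side is that the model also becomes freer to fit noise, so λ is usually chosen on held-out data rather than on training error.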

Reducing Variance (Handling Overfitting)

- Use a Simpler Model: Reduce model complexity to avoid capturing noise.

- Increase Training Data: More data helps the model learn general patterns instead of memorizing.

- Regularization Techniques: Apply L1 (Lasso) or L2 (Ridge) regularization to penalize overly complex models.

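- Cross-Validation: Use k-fold cross-validation to estimate performance on held-out subsets of the data. It does not reduce variance by itself, but it exposes overfitting and guides the choice of model complexity and regularization strength.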
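A minimal sketch of k-fold cross-validation, written from scratch so the mechanics are visible. The helper names (`k_fold_indices`, `cross_val_mse`) and the two candidate models are hypothetical and chosen for this example; in practice a library routine would normally be used instead. Each fold is held out once, the model is fitted on the remaining folds, and the held-out error is recorded.

```python
import random


def k_fold_indices(n, k, seed=0):
    """Shuffle indices 0..n-1 and split them into k nearly equal folds."""
    indices = list(range(n))
    random.Random(seed).shuffle(indices)
    return [indices[i::k] for i in range(k)]


def cross_val_mse(xs, ys, fit, predict, k=5):
    """Return one held-out mean squared error per fold."""
    scores = []
    for held_out in k_fold_indices(len(xs), k):
        held = set(held_out)
        train_idx = [i for i in range(len(xs)) if i not in held]
        model = fit([xs[i] for i in train_idx], [ys[i] for i in train_idx])
        errors = [(predict(model, xs[i]) - ys[i]) ** 2 for i in held_out]
        scores.append(sum(errors) / len(errors))
    return scores


# Candidate 1 (hypothetical): always predict the mean training target.
def fit_mean(xs, ys):
    return sum(ys) / len(ys)


def predict_mean(model, x):
    return model


# Candidate 2 (hypothetical): least-squares line y = a + b * x.
def fit_line(xs, ys):
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    b = sxy / sxx
    return mean_y - b * mean_x, b


def predict_line(model, x):
    a, b = model
    return a + b * x


# Illustrative noiseless data on the line y = 2x + 1.
xs = [float(i) for i in range(20)]
ys = [2.0 * x + 1.0 for x in xs]

mean_scores = cross_val_mse(xs, ys, fit_mean, predict_mean, k=5)
line_scores = cross_val_mse(xs, ys, fit_line, predict_line, k=5)
print("mean model CV MSE:", sum(mean_scores) / len(mean_scores))
print("line model CV MSE:", sum(line_scores) / len(line_scores))
```

Comparing the averaged fold scores of candidate models is how cross-validation helps pick a level of complexity: the candidate with the lower held-out error is the one more likely to generalize.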

Conclusion

Bias and variance are two crucial aspects of machine learning models. High bias leads to underfitting, while high variance leads to overfitting. The key to building an effective model is to balance these two factors. By applying the right techniques, such as collecting more data, feature engineering, and regularization, one can develop a model that generalizes well and makes accurate predictions. Understanding the bias-variance tradeoff is essential for every data scientist and machine learning practitioner.