Introduction

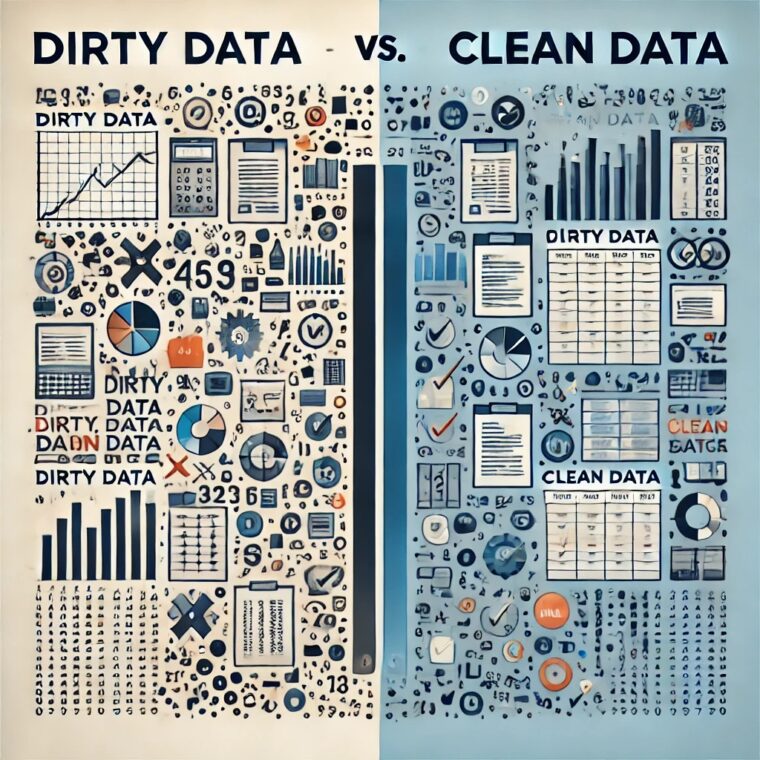

Data is the foundation of machine learning and data analysis, and a model's accuracy depends heavily on the quality of the data used to train it. In data science, we often distinguish between two kinds of data: dirty data and clean data. Understanding the difference is crucial for building accurate, reliable, and effective models.

What is Dirty Data?

Definition

Dirty data refers to data that is inaccurate, incomplete, inconsistent, or otherwise erroneous. It can arise from many sources, such as human error, faulty data collection methods, or system failures.

Types of Dirty Data

- Missing Data – Some values are absent in the dataset.

- Duplicate Data – The same records appear multiple times.

- Inconsistent Data – Different formats or representations of the same information.

- Incorrect Data – Wrong values due to human errors or system malfunctions.

- Outliers – Extreme values that may distort the analysis.

- Irrelevant Data – Data that is unnecessary for analysis or model training.

Real-World Examples of Dirty Data

- E-commerce: A customer’s address is stored as “NY” in one entry and “New York” in another, causing inconsistency.

- Healthcare: Missing patient age or incorrect spelling of disease names in medical records.

- Finance: Duplicate bank transactions leading to miscalculated account balances.
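
To make these examples concrete, here is a minimal pandas sketch that builds a small, made-up table containing several kinds of dirty data and counts the symptoms. The column names (`customer_id`, `city`, `age`, `amount`), the values, and the `audit` helper are all hypothetical and chosen only for illustration.

```python
import pandas as pd

# Hypothetical toy data (not from a real system) mixing several kinds of dirty data:
# inconsistent city spellings, a missing age, and one duplicated transaction row.
transactions = pd.DataFrame(
    {
        "customer_id": [101, 102, 102, 103, 104],
        "city": ["NY", "New York", "New York", "new york ", "Boston"],
        "age": [34, 27, 27, None, 41],
        "amount": [20.0, 15.5, 15.5, 42.0, 9.99],
    }
)


def audit(df: pd.DataFrame) -> dict:
    """Hypothetical helper: count a few common symptoms of dirty data."""
    return {
        # Missing data: number of empty (NaN/None) cells in each column
        "missing_per_column": df.isna().sum().to_dict(),
        # Duplicate data: rows that exactly repeat an earlier row
        "duplicate_rows": int(df.duplicated().sum()),
        # Inconsistent data: every raw spelling used in the city column
        "city_spellings": sorted(df["city"].unique()),
        # Finance example: the duplicated row inflates the total
        "total_amount": round(df["amount"].sum(), 2),
        "total_without_duplicates": round(df.drop_duplicates()["amount"].sum(), 2),
    }


report = audit(transactions)
print(report)
```

Note that `duplicated()` only catches exact repeats. The "NY" and "New York" rows are not recognized as the same city until the values are standardized.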

What is Clean Data?

Definition

Clean data is well-structured, accurate, and properly formatted. It is free from errors, inconsistencies, and missing values, making it ideal for analysis and machine learning.

Characteristics of Clean Data

- Complete – No missing values.

- Consistent – Standardized formats and correct spellings.

- Accurate – True representation of real-world information.

- Unique – No duplicate records.

- Relevant – Contains only the information needed for the analysis.
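
Most of these characteristics can be checked automatically. The sketch below shows one possible set of pandas checks. It assumes you know which column should uniquely identify a record and which values each categorical column may take; the function name `check_clean` and its parameters are hypothetical. Accuracy is left out because verifying it usually requires comparison against a trusted external source.

```python
import pandas as pd


def check_clean(df, key, allowed_values=None, required_columns=None):
    """Hypothetical helper returning True/False per characteristic of clean data.

    key: column expected to identify each record exactly once.
    allowed_values: optional {column: set of permitted values}.
    required_columns: optional list of the columns the analysis actually needs.
    """
    checks = {
        # Complete: no missing cells anywhere in the table
        "complete": not df.isna().any().any(),
        # Unique: no key value appears more than once
        "unique": bool(df[key].is_unique),
    }
    if allowed_values is not None:
        # Consistent: every value comes from the agreed vocabulary
        checks["consistent"] = all(
            bool(df[col].isin(values).all()) for col, values in allowed_values.items()
        )
    if required_columns is not None:
        # Relevant: the table holds no columns beyond the required ones
        checks["relevant"] = set(df.columns) <= set(required_columns)
    return checks


clean = pd.DataFrame({"customer_id": [1, 2, 3], "city": ["New York", "Boston", "New York"]})
dirty = pd.DataFrame({"customer_id": [1, 1, 2], "city": ["NY", "NY", None]})

rules = {
    "key": "customer_id",
    "allowed_values": {"city": {"New York", "Boston"}},
    "required_columns": ["customer_id", "city"],
}
print(check_clean(clean, **rules))  # every check passes
print(check_clean(dirty, **rules))  # complete, unique, and consistent fail
```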

Real-World Examples of Clean Data

- E-commerce: All product prices are correctly formatted, and customer details are verified.

- Healthcare: Patient records contain accurate age, diagnosis, and treatment details.

- Finance: Each bank transaction is recorded exactly once, with the correct amount.

How to Clean Dirty Data

Methods for Cleaning Data

- Handling Missing Data: Fill (impute) missing values using the mean, median, or mode, or predict them with a model.

- Removing Duplicates: Identify and delete duplicate records.

- Standardizing Formats: Convert inconsistent formats into a standard representation (e.g., ‘NY’ to ‘New York’).

- Correcting Errors: Validate and fix incorrect spellings, numbers, and misplaced entries.

- Outlier Detection: Use statistical methods or visualization tools to identify and handle outliers.

- Removing Irrelevant Data: Filter out unnecessary columns or values that do not contribute to analysis.
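
Several of these methods can be chained together in pandas. The following is a minimal sketch, not a general-purpose recipe. It assumes a table with hypothetical `city`, `age`, `amount`, and `favorite_movie` columns, a hand-written `CITY_MAP` for standardization, median imputation for age, and the common 1.5 × IQR rule for flagging outliers (one statistical method among several). Correcting errors is omitted because that step usually needs domain-specific rules.

```python
import pandas as pd

# Hypothetical lookup table: lowercased variant spelling -> canonical city name.
CITY_MAP = {"ny": "New York", "new york": "New York", "boston": "Boston"}


def clean(df: pd.DataFrame, irrelevant=("favorite_movie",)) -> pd.DataFrame:
    out = df.copy()
    # Removing irrelevant data: drop columns the analysis does not use
    out = out.drop(columns=[c for c in irrelevant if c in out.columns])
    # Standardizing formats: trim whitespace, lowercase, then map to one spelling.
    # Spellings missing from CITY_MAP become NaN, so they surface as missing values.
    out["city"] = out["city"].str.strip().str.lower().map(CITY_MAP)
    # Removing duplicates: done after standardizing so "NY" and "New York" rows match
    out = out.drop_duplicates().reset_index(drop=True)
    # Handling missing data: fill missing ages with the median age
    out["age"] = out["age"].fillna(out["age"].median())
    # Outlier detection: flag (not delete) amounts outside the 1.5 * IQR fences
    q1, q3 = out["amount"].quantile([0.25, 0.75])
    iqr = q3 - q1
    out["amount_outlier"] = ~out["amount"].between(q1 - 1.5 * iqr, q3 + 1.5 * iqr)
    return out


raw = pd.DataFrame(
    {
        "customer_id": [1, 2, 2, 3, 4, 5],
        "city": ["NY", "New York", "new york ", "Boston", "boston", "NY"],
        "age": [34, 27, 27, None, 41, 38],
        "amount": [20.0, 15.5, 15.5, 18.0, 22.0, 999.0],
        "favorite_movie": ["A", "B", "B", "C", "D", "E"],
    }
)
cleaned = clean(raw)
print(cleaned)
```

Flagging outliers instead of deleting them leaves the final decision to someone who knows the domain. Here only the 999.0 amount is flagged.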

Tools for Data Cleaning

- Pandas (Python) – A library for loading, inspecting, and cleaning tabular data, including handling missing values and duplicates.

- OpenRefine – Helps with data transformation and standardization.

- Excel – Basic tool for manual data cleaning and formatting.

Dirty Data vs. Clean Data

| Category | Dirty Data Example | Clean Data Example |
|---|---|---|
| Missing Data | Customer age is missing in some records. | All customer age fields are properly filled. |
| Duplicate Data | The same order appears twice in the database. | Duplicate orders are removed. |
| Inconsistent Data | Dates formatted differently (e.g., ‘01/02/2024’ vs. ‘2024-02-01’). | All dates follow the same format (YYYY-MM-DD). |
| Incorrect Data | A product price listed as $999 instead of $9.99. | Corrected price values. |
| Outliers | A student’s test score recorded as 999. | Unusual values are identified and corrected. |
| Irrelevant Data | A dataset about customers includes their favorite movie, which is not needed for analysis. | Only relevant features are retained. |
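
The inconsistent-date row can be fixed by parsing each string against a list of known formats and re-emitting it as YYYY-MM-DD. This standard-library sketch assumes the day-first reading implied by the table (‘01/02/2024’ as 1 February 2024). The format list and the function names are hypothetical and should match the formats your source systems actually produce. The sketch also includes a simple valid-range check for the test-score row, assuming scores must lie between 0 and 100.

```python
from datetime import datetime
from typing import List, Optional

# Assumed input formats, tried in order. "%d/%m/%Y" encodes the day-first
# assumption; swap it for "%m/%d/%Y" if your source writes the month first.
CANDIDATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%Y/%m/%d")


def to_iso_date(raw: str) -> Optional[str]:
    """Return the date as YYYY-MM-DD, or None if no known format matches."""
    text = raw.strip()
    for fmt in CANDIDATE_FORMATS:
        try:
            return datetime.strptime(text, fmt).strftime("%Y-%m-%d")
        except ValueError:
            continue  # try the next format
    return None  # unparseable: leave it for manual review rather than guess


def out_of_range(values: List[float], low: float = 0, high: float = 100) -> List[int]:
    """Return the positions of values outside [low, high] (assumed valid score range)."""
    return [i for i, v in enumerate(values) if not low <= v <= high]


dates = ["01/02/2024", "2024-02-01", " 2024/02/01 ", "sometime in Feb"]
print([to_iso_date(d) for d in dates])  # ['2024-02-01', '2024-02-01', '2024-02-01', None]

scores = [88, 74, 999, 91]
print(out_of_range(scores))  # [2]
```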

Conclusion

Dirty data can lead to inaccurate predictions, poor decisions, and poorly performing machine learning models. Cleaning data is an essential preprocessing step that helps ensure high-quality insights and better model performance. By identifying and correcting errors, standardizing formats, and handling missing values, we can transform dirty data into clean data that is suitable for analysis and machine learning.